Happy to share our latest paper, “Text-RGNNs: Relational Modeling for Heterogeneous Text Graphs”, published in IEEE Signal Processing Letters!

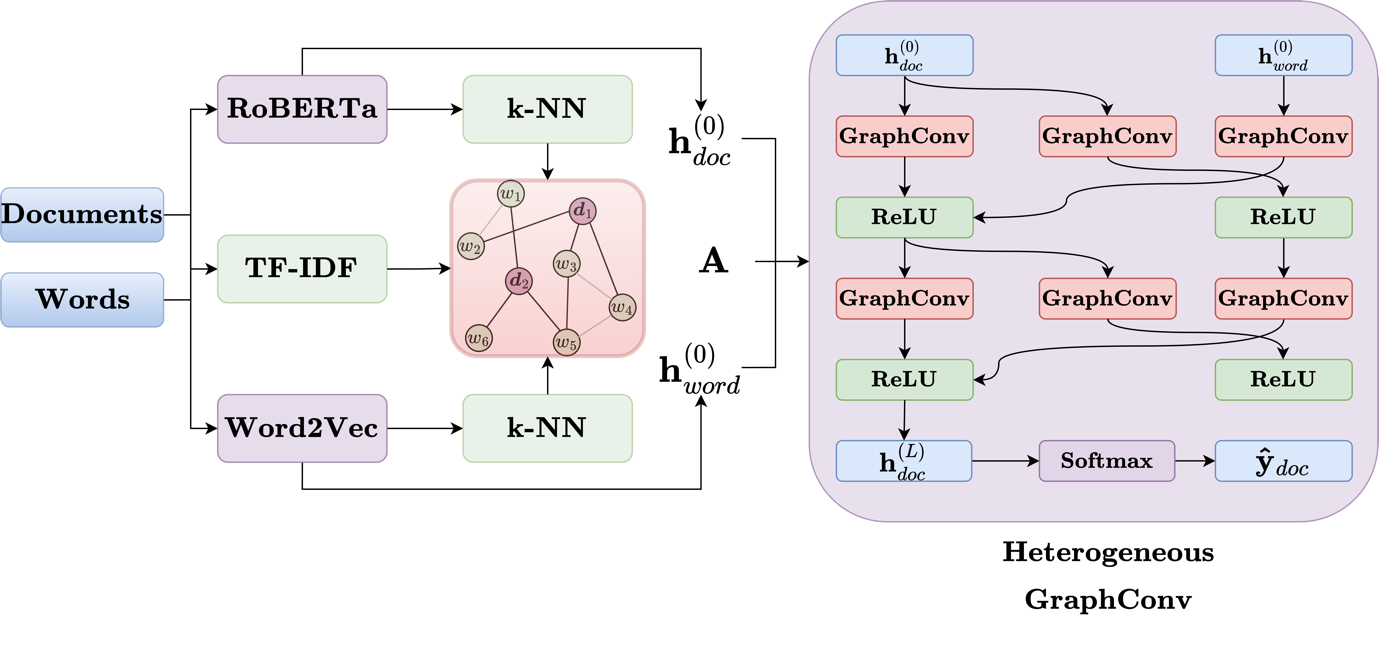

Text-graph convolutional network (TextGCN) is the foundational work that represents a corpus as a heterogeneous text graph. We address one of its key limitations: GCNs are inherently designed to operate on homogeneous graphs, which can limit their performance on heterogeneous ones. To overcome this, we introduce Text-RGNNs, which build on relational graph neural networks (RGNNs) and assign a dedicated weight matrix to each relation in the graph for more nuanced modeling. Our experiments on benchmark datasets show that Text-RGNNs outperform existing state-of-the-art models in settings with completely labeled nodes and with minimal proportions of labeled training data, beating the second-best models by up to 10.61% on the corresponding evaluation metric!

Check out the paper for more details!

Paper: https://doi.org/10.1109/LSP.2024.3433568

Code: https://github.com/koc-lab/text-rgnn

Abstract:

Text-graph convolutional network (TextGCN) is the fundamental work representing corpus with heterogeneous text graphs. Its innovative application of GCNs for text classification has garnered widespread recognition. However, GCNs are inherently designed to operate within homogeneous graphs, potentially limiting their performance. To address this limitation, we present Text Relational Graph Neural Networks (Text-RGNNs), which offer a novel methodology by assigning dedicated weight matrices to each relation within the graph by using heterogeneous GNNs. This approach leverages RGNNs, enabling nuanced and compelling modeling of relationships inherent in the heterogeneous text graphs, ultimately resulting in performance enhancements. We present a theoretical framework for the relational modeling of GNNs for text classification within the context of document classification and demonstrate its effectiveness through extensive experimentation on benchmark datasets. Conducted experiments reveal that Text-RGNNs outperform the existing state-of-the-art in scenarios with complete labeled nodes and minimal labeled training data proportions by incorporating relational modeling into heterogeneous text graphs. Text-RGNNs outperform the second-best models by up to 10.61% for the corresponding evaluation metric.
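To give a feel for what "a dedicated weight matrix per relation" means, here is a minimal, dependency-free sketch of a single relational graph convolution step. It is not the code from the repository above. It assumes the common R-GCN-style update, h_i' = ReLU(W_self h_i + Σ_r mean_{j ∈ N_r(i)} W_r h_j), and the relation names (`word-word`, `word-doc`, `doc-word`), the toy graph, and all weight values are made up for illustration.

```python
# Illustrative sketch only: relation names, graph, and weights are assumptions,
# not values taken from the paper or its repository.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relational_layer(features, edges_by_relation, weights, self_weight):
    """One relational update: self term plus a per-relation mean of messages.

    features          : list of node feature vectors
    edges_by_relation : {relation name: [(source, target), ...]}
    weights           : {relation name: weight matrix for that relation}
    self_weight       : weight matrix applied to each node's own features
    """
    out = [matvec(self_weight, h) for h in features]
    for relation, edges in edges_by_relation.items():
        W_r = weights[relation]  # dedicated matrix for this relation
        incoming = {}
        for src, dst in edges:
            incoming.setdefault(dst, []).append(matvec(W_r, features[src]))
        for dst, msgs in incoming.items():
            for k in range(len(out[dst])):
                # Normalize by the number of neighbors under this relation.
                out[dst][k] += sum(m[k] for m in msgs) / len(msgs)
    # ReLU non-linearity
    return [[max(0.0, v) for v in row] for row in out]


# Toy heterogeneous text graph: nodes 0 and 1 are words, node 2 is a document.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edges_by_relation = {
    "word-word": [(0, 1), (1, 0)],
    "word-doc": [(0, 2), (1, 2)],
    "doc-word": [(2, 0), (2, 1)],
}
identity = [[1.0, 0.0], [0.0, 1.0]]
weights = {
    "word-word": identity,
    "word-doc": [[2.0, 0.0], [0.0, 2.0]],
    "doc-word": [[0.5, 0.0], [0.0, 0.5]],
}

hidden = relational_layer(features, edges_by_relation, weights, identity)
print(hidden)
```

A plain GCN layer would push every edge through the same weight matrix. Here a message from a word to a document is transformed differently from a message between two words, which is the intuition behind relational modeling on heterogeneous text graphs.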